Docker 00 - What is Docker?

Docker is a widely used tool in software development that streamlines the build, ship, and run lifecycle. First released in 2013, it is an OS-level virtualization platform. In OS-level virtualization, the kernel of an operating system allows multiple isolated user-space instances to run side by side. Unlike a hypervisor, which runs multiple full operating systems, Docker runs on a single Linux instance and requires only a single operating system. This makes it lightweight and efficient, enabling the rapid deployment of applications across diverse environments. In addition, a running container can see only the resources allocated to it, whereas an ordinary operating system can see all the resources of the host machine.

Docker Image

A Docker image, often referred to as an ‘OCI image’ after the Open Container Initiative image format, is essentially a blueprint for creating a running application. It is built from a Dockerfile, a text document containing sequential instructions akin to a shell script. These instructions produce layers that stack to form a complete package, encapsulating not only the application but also all its dependencies. This ensures that an application behaves the same way across diverse environments, solving the notorious “works on my machine” problem. The only things the image leaves out are the operating system’s kernel and hardware drivers, which the host provides.
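
To make the idea of stacked layers concrete, here is a toy model in Python. It is only a sketch of the concept, not how Docker stores images: each layer is represented as a dictionary of file paths, and the paths and contents are invented for illustration. The point it shows is that when two layers contain the same path, the later layer wins.

```python
from collections import ChainMap

# Each layer is modeled as a mapping of file path -> content.
# These paths and contents are hypothetical, chosen only for the example.
base_layer = {"/etc/os-release": "debian", "/usr/bin/python3": "python 3.11"}
deps_layer = {"/app/requirements.txt": "flask"}
app_layer = {"/app/main.py": "print('hello')", "/etc/os-release": "debian (patched)"}


def merged_view(layers):
    """Return the combined file view of a stack of layers (later layers win)."""
    # ChainMap looks up keys left to right, so the top-most layer goes first.
    return ChainMap(*reversed(layers))


image = [base_layer, deps_layer, app_layer]
fs = merged_view(image)

print(fs["/etc/os-release"])  # debian (patched)
print(sorted(fs))             # every path visible in the merged view
```

The lower layers are never modified, which is why the same base layer could, in principle, be reused by many images.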

Docker Registry

A Docker registry plays a role similar to the package repositories behind tools such as apt or npm: users ‘push’ their Docker images to the registry and then ‘pull’ these images onto different machines. This system is efficient because it transfers only the layers the destination does not already have, not the entire image. It also uses local caching to speed up container deployment. Through the Docker registry, teams can ensure that the same image is used across all environments, from development to production.
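
The "only the differing layers" behavior can be sketched as a simple comparison between the layers an image needs and the layers already cached locally. The function name `layers_to_pull` and the shortened digests below are hypothetical placeholders for illustration; a real client works with full content digests and the registry's HTTP API.

```python
def layers_to_pull(image_layers, local_cache):
    """Return the layer digests that are missing locally, in image order."""
    return [digest for digest in image_layers if digest not in local_cache]


# Placeholder digests; real ones are full SHA-256 hashes.
local_cache = {"sha256:aaa", "sha256:bbb"}
new_image = ["sha256:aaa", "sha256:bbb", "sha256:ccc"]

print(layers_to_pull(new_image, local_cache))  # ['sha256:ccc']
```

In this example only one of the three layers has to be downloaded, because the other two are already present on the machine.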

Docker Container

Launching a Docker image creates a Docker container. This container is a live, isolated environment where the application runs. Docker uses specific Linux kernel features, namely namespaces and cgroups (control groups), to achieve this isolation. As a result, each containerized application has access only to its own files and processes, and it operates with its own private network interface. This is not traditional virtualization but a form of lightweight application isolation. It allows multiple containers, derived from the same image or from different images, to run simultaneously on the same host machine without interfering with one another.
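
On a Linux machine, the namespaces a process belongs to are exposed under `/proc/<pid>/ns`. The short script below is a minimal sketch that lists them for the current process; the helper name `current_namespaces` is made up for this example, and on systems without `/proc` it simply returns an empty result. Running it on the host and again inside a container would typically show different identifiers for most entries.

```python
import os


def current_namespaces(pid="self"):
    """Map namespace name -> kernel identifier for a process (Linux only)."""
    ns_dir = f"/proc/{pid}/ns"
    result = {}
    if not os.path.isdir(ns_dir):
        return result  # not Linux, /proc not mounted, or no such process
    try:
        names = sorted(os.listdir(ns_dir))
    except OSError:
        return result  # directory exists but cannot be listed
    for name in names:
        try:
            # Each entry is a symlink whose target identifies the namespace.
            result[name] = os.readlink(os.path.join(ns_dir, name))
        except OSError:
            continue  # entry not readable; skip it
    return result


for name, ident in current_namespaces().items():
    print(f"{name:20} {ident}")
```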

Container vs Virtual Machine

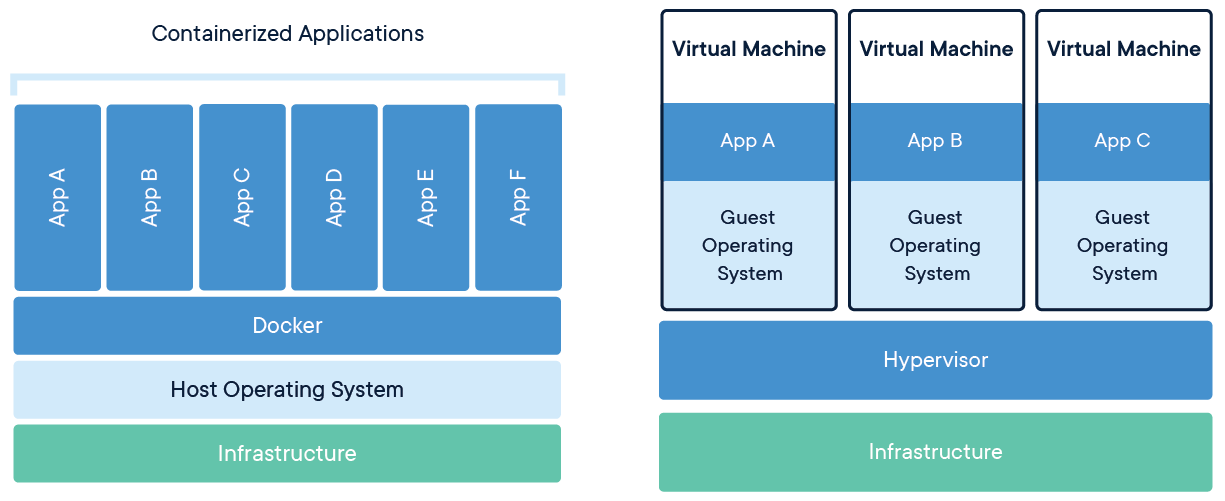

Containers and virtual machines (VMs) are both popular technologies used in software development and deployment. While they serve similar purposes, there are key differences between the two.

Virtual Machines (VMs)

A virtual machine is an emulation of a computer system. VMs run on top of a hypervisor, which is a software layer that abstracts the physical hardware and allows multiple operating systems to run on the same physical machine. Each VM contains the application and necessary binaries and libraries, along with a complete operating system. Because of this, VMs are more resource-intensive and slower to start compared to containers.

Containers

Containers run on top of the host operating system’s kernel and share its resources. All containers on a host share that kernel, but each container runs as a set of isolated processes in user space.

Key Differences

- Resource Utilization: Virtual machines require a separate operating system for each instance, resulting in higher resource usage. Containers share the host operating system, leading to better resource utilization.

- Isolation: Virtual machines provide stronger isolation as each instance has its own operating system. Containers share the host operating system, which can lead to potential security risks if not properly configured.

- Portability: Containers are highly portable and can be easily moved between different environments. Virtual machines are less portable due to their dependency on specific hypervisors.

- Performance: Containers have lower overhead and faster startup times compared to virtual machines.

- Use Cases: Virtual machines are commonly used for running multiple operating systems, hosting legacy applications, and providing strong isolation. Containers are ideal for microservices architectures, application deployment, and scaling.
